How Worried Should We Be About Nudifying Apps?

Key Points

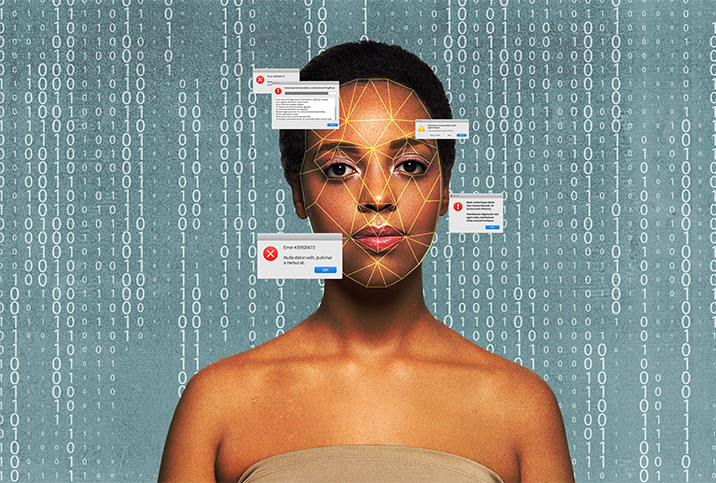

- Nudifying apps, powered by AI, can generate nude images from clothed pictures, raising concerns about privacy, consent and potential misuse.

- Despite legislation around the world to combat "deepfake" images, the problem appears to be getting worse.

- Content producers, marketers and those in the tech industry should prioritize body positivity, ethical behavior and digital consent, according to experts.

We don't yet know where artificial intelligence (AI) will take us. Its impact, however, can already be felt in pornography. It has created open access to nudifying apps that can render a person's naked body from a single clothed image.

The market for nudifying apps is already crowded, with at least 50 of them being variously ranked by the spammy websites that promote them. We won't link to one here, but like anything else on the internet, they are not that hard to find.

The laws surrounding deepfake pornography

After the DeepSukebe app went live in 2020, it reportedly received millions of hits each month, prompting a British member of Parliament, Maria Miller, to call for a debate on whether its images should be banned.

Some of the apps have been withdrawn due to misuse, yet the technology lives on. The United Kingdom has since drafted an Online Safety Bill to criminalize sending or sharing deepfake porn, but it has yet to be passed.

The United States is also exploring legislation to make sharing nonconsensual deepfake pornography illegal and to give those affected a legal channel for recourse.

"This bill aims to make sure there are both criminal penalties as well as civil liability for anyone who posts, without someone's consent, images of them appearing to be involved in pornography," said Rep. Joe Morelle (D-New York), the author of the Preventing Deepfakes of Intimate Images Act.

While there is no federal law as yet, California, New York, Georgia and Virginia have all banned nonconsensual deepfakes at the state level.

Sensity AI, a fraud-detection company, reported in 2018 that of the deepfakes found online, 90 percent are pornographic clips of women who have not given consent for their image to be used.

How do nudifying apps remove our clothes?

Nudifying apps work in a similar way to other machine learning systems, in that they have been trained on large volumes of nude imagery, said James Bore, a security professional with the cybersecurity firm Bores in London.

"It's important to note that this is effectively a best guess based on the nude pictures they have been trained on, rather than actually removing clothing from the pictures," he said.

This means any image of a person that you post online can be fed into one of these apps and nudified.

"Tragically, the only protections are by not posting pictures online or by only posting pictures with baggy clothing, which makes it more challenging for the models to work," Bore said.

The ways to regulate or reduce the practice may expand as AI becomes more and more commonplace.

"Some platforms are now adopting policies or technology designed to deter or detect the use of large language models like ChatGPT," Wired noted in a January 2023 report.

Until technology or global legislation to control nonconsensual deepfake porn exists, there remain complex ethical issues.

What are the implications of nudifying apps?

Some of the negative effects of nudifying apps, according to Nick Bach, Psy.D., a licensed clinical psychologist at Grace Psychological Services in Louisville, include:

- Body image issues. They can contribute to unrealistic beauty standards by perpetuating the idea that a perfect body or specific appearance is necessary to be attractive, an idea that can lead to low self-esteem.

- Consent and trust. They can violate an individual's boundaries and trust, leading to emotional distress and damage to relationships.

- Cyberbullying and exploitation. These apps provide opportunities to maliciously modify and share images without consent. This could lead to online harassment, exploitation and long-term negative consequences in the person's life.

- Legal implications. Nonconsensual use of someone's image, distribution of explicit content without consent or the violation of privacy laws may result in legal action. One day, doing so could incur severe financial penalties and even prison time.

- Privacy concerns. They can infringe on a person's privacy when used inappropriately and potentially be used for harassment or revenge porn.

Bach said users should consider these implications before using nudifying apps, and that lawmakers and tech companies need to work together to protect people at risk.

"It is crucial to promote ethical behavior, respect for privacy and consent," he said.

Why do people use nudifying apps?

Understanding why people use these apps is crucial to deciding how they can be regulated in the future.

"These apps may tap into deeply ingrained voyeuristic tendencies and fantasies," said Raffaello Antonino, the clinical director and counseling psychologist at Therapy Central in London.

The ability to "undress" someone feels like an exciting alternative to mainstream pornography, giving users the feeling of being in control, as well as realizing their fantasies. However, it's when a private fantasy is made real on a device screen that ethics are breached and boundaries are crossed, he said.

"While not all usage is malicious or an act of exploitation, even those who interact with the apps for fun can still contribute to the normalization of nonconsensual image manipulation, thereby blurring the boundaries of digital consent," Antonino said.

Promoting the responsible use of AI

Anyone who works in the tech industry may need to consider how this technology affects them and their respective brands.

"Promoting body positivity, respecting limits, and abiding by legal and ethical rules are essential from a marketing perspective," said Kelvin Wira, the Singapore-based founder of the animation agency Superpixel.

He recommended that content producers and marketers empower people in order to create a positive online community.

"Responsible digital behavior must be encouraged," he said.

Addressing the fallout of nudifying apps

When a person is "nudified" without their consent, it can affect their mental health, especially when they have no control and can't stop it from happening.

"First and foremost, know it isn't about you," said Heather Shannon, L.C.P.C., a licensed clinical counselor and an AASECT-certified sex therapist in Puerto Rico. "It's about that person trying to meet their own desires. It may still feel creepy, scary, invasive or vulnerable to you, and that's understandable."

Get support from family, friends, a coach or a therapist during this time.

It's possible the person creating the deepfakes could have a sex or pornography addiction or need help in some way, Shannon added.

Conversations around consent for AI-generated images

When it comes to relationships, having conversations about consent and boundaries at the beginning can help both parties know where the other stands. It can also help point to any potential red flags if the person has differing or problematic views that may create issues down the line.

During these chats, Shannon advised having a discussion about using AI to alter the images or videos you take or send of each other. That way the person is aware of your boundaries and that crossing them is a definite breach, which could harm trust in the relationship.

Promoting responsible AI use and safeguarding a person's privacy and autonomy is essential to help reduce our fears around it, Antonino said.

"This dialog is critical to ensure that advances in AI don't come at the cost of personal dignity and privacy," he added.