The Terrifying Rise of AI-Generated Porn

In early September, MIT Technology Review published a story about a new app it referred to only as "Y." The outlet declined to give the app's real name, but described its simple, disturbing service: from just a single photograph, users could swap the face of a person of their choice into one of several available adult videos.

It is unknown how realistic the app was or how widely it might have been used, because it went dark shortly after MIT Technology Review reached out to the developers for comment. According to the article, Y was still down at the time of publication. Still, the unnamed and short-lived program is a sign of the rise of nonconsensual "deepfake" porn, built on a technology that has been quietly exploding in popularity over the past few years and leaving ruined lives in its wake.

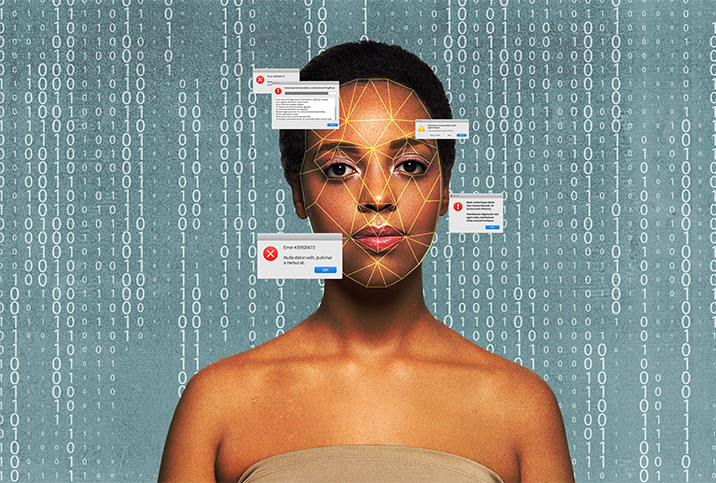

What's a deepfake?

"Deepfake" is a portmanteau of "deep learning" and "fake." Put simply, it's a method of superimposing someone's likeness onto video footage using AI or machine-learning technology. The result is a video that looks, to varying degrees, like actual footage of the subject.

If you're immersed in meme culture, you've probably encountered this tech already. You might have watched WWII rivals Hitler and Stalin's "Video Killed the Radio Star" duet, for example, or Justin Bieber feud with a fake Tom Cruise.

However, not all deepfake videos are created for lighthearted ends. As the technology progresses, and as deepfakes become more convincing and easier to manufacture, journalists and world leaders have worried about their potential to spread disinformation. The Pentagon has even begun to develop programs to detect deepfake videos and trace their sources, which it said "could present a variety of national security challenges in the years to come."

The rise of deepfake porn

For all the speculation on the political threat of deepfakes, there's a much more prevalent danger already here. According to a 2019 study from Sensity AI, 96 percent of the deepfakes online weren't political leaders singing or spreading misinformation—they were nonconsensual fake porn videos.

The term "deepfake" itself was born in the swamps of nonconsensual smut. In 2017, according to Vice reporter Samantha Cole, the Reddit user u/deepfakes began posting doctored porn videos featuring the faces of celebrities like Gal Gadot and Taylor Swift. The subreddit r/deepfakes soon formed around these videos; there, users created, sold or requested deepfakes, primarily of famous musicians and actresses.

Some attention was drawn to the issue of deepfakes in 2019, when actress Scarlett Johansson told the Washington Post she was aware that she had been depicted in several deepfake porn videos. Johansson, who'd previously had her private nude photos stolen and leaked in 2017, wearily wrote that she didn't see much point in seeking legal recourse.

AI versus the girl next door

The problem of nonconsensual AI-generated porn wasn't limited to those in the public sphere, as a 2020 Sensity report on the now-defunct program DeepNude revealed.

DeepNude didn't create deepfakes per se—it produced still images, rather than videos—but the technology and its purpose were similar. DeepNude used machine learning to "strip" photo subjects of their clothes and create realistic approximations of their naked bodies.

The women who were targeted also differed—while the vast majority of deepfake video subjects in Sensity's 2019 report were celebrities, most DeepNude subjects were private individuals.

According to the 2020 report, approximately 104,852 women had their digitally stripped images shared publicly as of July 2020. Of these, 63 percent were women whom DeepNude users themselves described as "familiar girls, whom I know in real life."

DeepNude exploded in popularity shortly after its June 2019 launch—the bot and its associated Telegram channels attracted approximately 101,080 members, Sensity reported. Overwhelmed by a glut of new users, the creators of DeepNude shut the project down less than a month after it appeared.

But the tech behind DeepNude lived on. A month after it shuttered, the original creators sold the DeepNude license to an anonymous buyer for $30,000. Reverse-engineered versions of the app can still be found online as open-source code and via torrents, according to Sensity.

The fight against deepfakes

Australian activist Noelle Martin has fought a public and private battle against deepfakes and fake porn for almost a decade.

In a phone interview, Martin said she came across doctored images of her 17-year-old self after conducting a Google reverse-image search of a selfie. She soon realized that for "potentially over a year," anonymous individuals had been editing her face onto the bodies of adult performers.

Martin's first instinct wasn't to speak out, she said, but to try to get the images taken down as soon as possible. With no legal avenues available at the time, she began reaching out to the sites that hosted the fake nudes to reclaim her image.

More often than not, administrators were unhelpful—or outright abusive, Martin said.

"Sometimes they would respond that they've removed it and then two weeks later it would pop up again," she said. "Some require ID to remove, some won't respond and some will extort you and hold that against you for other favors."

It was this lack of accountability and justice that drove her into activism, making her feel all but forced to speak out, Martin said. Through interviews with the media and a 2017 TEDx talk, Martin began to draw attention to the neglected, real-life harm of fake porn.

As any woman with an internet presence can tell you, speaking out on an issue risks attracting the exact kind of abuse you're trying to fight. Since she took her struggle public, Martin said, fake nudes featuring her face have grown in number, and in 2018, two deepfake porn videos of her appeared online.

"Even when I was speaking out...the perpetrators still continued to target me," Martin said. "In fact, they would use images of me in the media advocating against the abuse."

The real fallout of fake porn

It doesn't matter how convincing a deepfake or fake nude is, Martin told me—the harm it wreaks on your life is very real.

For example, fake porn is often passed off as consensual or "leaked" footage, which threatens significant damage to your reputation. Prospective employers aren't likely to scan graphic pornography for evidence of a forgery, Martin said.

The emotional toll of having your identity manipulated for the gratification of anonymous perpetrators is also very real, she said.

"It is absolutely extremely violating to see yourself in that way, to see yourself being absolutely objectified and dehumanized—the fact that there's someone out there who has decided to take it upon themselves to create that," Martin said.

Adam Dodge, founder of EndTAB, a nonprofit that trains institutions to address online abuse, said depression, anxiety and other psychological harms are common among victims of deepfakes and other fake porn.

There's no universal reaction to this kind of abuse, Dodge said. Some victims report a dissociative kind of trauma, similar to that experienced by sexual assault survivors who have no memory of the incident.

"People are having a reaction akin to a sexual assault survivor watching a video of themselves being assaulted while unconscious," he said.

Recovery can be difficult as well because, despite their very real suffering, victims of image-based abuse often report feeling that their trauma is somehow illegitimate, he said.

"They don't feel like they are 'real victims' because they weren't actually physically assaulted," Dodge said.

What can be done?

By hyperfocusing on the speculative threat of political disinformation, lawmakers and the media ignore the real-time victims of deepfake tech, Dodge said. All the evidence shows—at least for right now—deepfakes are harming women, not democracies.

"I have a big problem with how little we focus on what's really going on, in favor of issues that might be a problem tomorrow but aren't a problem today," he said.

Some positive steps have been taken to address the issue. All of the most popular porn sites and social media platforms have banned deepfakes and fake nudes in recent years. On these sites, victims can issue takedown requests for any nonconsensual adult videos, real or manufactured, that feature their likeness.

Despite this progress, images ripped from social media continue to pop up in corners of the internet where deepfake porn is still permitted. Several websites oriented around hosting deepfakes remain popular, Dodge said, and no legal recourse is available to their victims.

Martin believes strong legislation is key. She was awarded Young Australian of the Year after her activism led to new laws in her own country criminalizing the distribution of "nonconsensual intimate images." But, Martin said, the laws she's inspired aren't strong enough to help her or anyone else in her situation.

The internet is a global network that requires global regulation, similar to the international cooperation between law enforcement to combat child exploitation on the internet, she said.

Dodge noted that legislation alone isn't sufficient without enforcement. Until creating and distributing nonconsensual fake porn carries real-life consequences for perpetrators, he said, the problem of deepfakes will continue.

"There's no disincentive to creating a deepfake and posting it online because the likelihood you're going to be held accountable or liable for that is very slim," he said.